SaunaFS documentation overview

Introduction

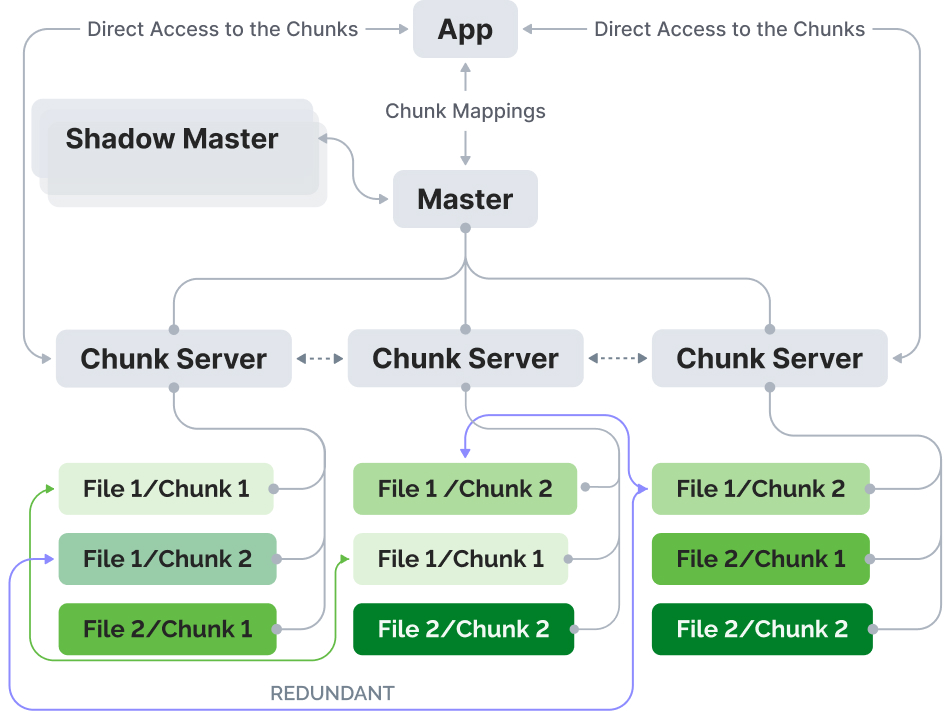

SaunaFS is a distributed POSIX file system inspired by the Google File System, comprising Metadata Servers (Master, Shadows, Metaloggers), Data Servers (Chunkservers), and Clients (supporting multiple operating systems and NFS). It employs a chunk-based storage architecture, segmenting files into 64 MiB chunks subdivided into 64 KiB blocks, each with 4 bytes of CRC (Cyclic Redundancy Check) for data integrity.
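The chunk and block sizes above imply some simple arithmetic, sketched below in Python. The function name `chunk_layout` is hypothetical, and counting a trailing partial block as a full block is an assumption made for illustration, not a statement about the on-disk format.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # 64 MiB per chunk
BLOCK_SIZE = 64 * 1024         # 64 KiB per block
CRC_SIZE = 4                   # 4 bytes of CRC per block


def chunk_layout(file_size: int) -> dict:
    """Return chunk count, block count, and total CRC bytes for a file.

    Assumption: a trailing partial chunk or block is counted as a whole one.
    """
    chunks = -(-file_size // CHUNK_SIZE)  # ceiling division
    blocks = -(-file_size // BLOCK_SIZE)
    return {"chunks": chunks, "blocks": blocks, "crc_bytes": blocks * CRC_SIZE}


# One full chunk holds 1024 blocks, which carry 4 KiB of CRC data in total.
print(chunk_layout(CHUNK_SIZE))
# → {'chunks': 1, 'blocks': 1024, 'crc_bytes': 4096}
```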

The replication goal for specific files and directories can be configured to use Erasure Coding based on Reed-Solomon for redundancy purposes. For instance, a file with 64 MiB of data, with replication goal EC(4,2), will divide the chunk into 4 data parts of 16 MiB and 2 parity parts of 16 MiB. This way, there is no data loss even if two Chunkservers are down or any two parts are lost.
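The EC(4,2) example can be reproduced with a few lines of Python. This is only a sizing sketch: `ec_layout` is a hypothetical helper, it performs no Reed-Solomon encoding, and it assumes the data is split evenly across the data parts.

```python
MIB = 1024 * 1024


def ec_layout(data_size: int, data_parts: int, parity_parts: int) -> dict:
    """Estimate part size and raw storage for an EC(data_parts, parity_parts) goal."""
    part_size = -(-data_size // data_parts)  # ceiling division
    stored = part_size * (data_parts + parity_parts)
    return {
        "part_size": part_size,
        "stored_bytes": stored,
        "overhead": stored / data_size,
        # Up to parity_parts parts can be lost without losing data.
        "tolerated_losses": parity_parts,
    }


layout = ec_layout(64 * MIB, data_parts=4, parity_parts=2)
print(layout["part_size"] // MIB)     # → 16
print(layout["stored_bytes"] // MIB)  # → 96
print(layout["overhead"])             # → 1.5
```

Under these assumptions, EC(4,2) stores 96 MiB for 64 MiB of data, a 1.5x overhead, while tolerating the loss of any two parts.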

The system also prioritizes data resiliency through data scrubbing and CRC32 checksum verification. Additional features include instant copy-on-write snapshots, efficient metadata logging, and hardware integration without downtime.
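To illustrate the idea behind per-block checksum verification, the following sketch recomputes a CRC32 for each 64 KiB block and reports the blocks whose checksum no longer matches. It uses Python's `zlib.crc32`; whether SaunaFS uses the same CRC32 variant is an assumption, and the helper names are hypothetical. It is not the scrubbing implementation itself.

```python
import zlib

BLOCK_SIZE = 64 * 1024  # 64 KiB per block


def block_checksums(data: bytes) -> list[int]:
    """Compute one CRC32 value per 64 KiB block of data."""
    return [
        zlib.crc32(data[offset:offset + BLOCK_SIZE])
        for offset in range(0, len(data), BLOCK_SIZE)
    ]


def find_corrupt_blocks(data: bytes, expected: list[int]) -> list[int]:
    """Return the indexes of blocks whose CRC32 differs from the stored value."""
    actual = block_checksums(data)
    return [i for i, (a, e) in enumerate(zip(actual, expected)) if a != e]


original = bytes(3 * BLOCK_SIZE)    # three zero-filled blocks
stored = block_checksums(original)  # checksums recorded at write time

damaged = bytearray(original)
damaged[BLOCK_SIZE + 10] ^= 0xFF    # flip bits inside the second block

print(find_corrupt_blocks(bytes(damaged), stored))  # → [1]
```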

Architectural overview of SaunaFS

Important notes

Hardware recommendations

There are no fixed hardware requirements, but for better results we recommend:

- 10 or 25 GbE networking

- Bonding (e.g., MC-LAG) across the switches for redundant setup

- Nodes with unified hardware configurations

Sample hardware configuration for a node:

- 1x Intel® Xeon® Silver or higher CPU (or AMD equivalent)

- 4x 16GB DDR4 ECC Registered DIMM

- 2x 240GB Enterprise SSD

- 10x Enterprise HDD

- 1x Network Interface Card 25GbE Dual-Port

If unified nodes cannot be provided, at least the Master and Shadow nodes should have a hardware configuration similar to the sample setup.

Alternatively, you can use the hardware sizer application to calculate hardware requirements for your specific needs: https://diaway.com/saunafs#calc

The recommendation for a unified hardware configuration anticipates future updates that will introduce setups with multiple master servers. We are currently working on a distributed-metadata architecture that removes the limit imposed by the RAM capacity of a single node within a namespace and allows parallel access to metadata.

The following table gives the estimated amount of RAM occupied by metadata as a function of the number of files in SaunaFS.

| Number of files | Overhead of all data structures |
|---|---|
| 1 | 500 B |
| 1 000 | 500 KB |
| 1 000 000 | 500 MB |
| 100 000 000 | 50 GB |
| 1 000 000 000 | 500 GB |
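The table follows a linear rule of roughly 500 bytes of metadata per file, which can be turned into a quick estimator. This is a rough sizing sketch; `estimate_metadata_ram` and `human_readable` are hypothetical helper names, and real usage will vary with the workload.

```python
BYTES_PER_FILE = 500  # approximate metadata overhead per file, taken from the table


def estimate_metadata_ram(num_files: int) -> int:
    """Estimate the RAM (in bytes) occupied by metadata for num_files files."""
    return num_files * BYTES_PER_FILE


def human_readable(num_bytes: float) -> str:
    """Format a byte count using decimal units, as the table does."""
    for unit in ("B", "KB", "MB", "GB", "TB"):
        if num_bytes < 1000:
            return f"{num_bytes:g} {unit}"
        num_bytes /= 1000
    return f"{num_bytes:g} PB"


for files in (1, 1_000, 1_000_000, 100_000_000, 1_000_000_000):
    print(files, human_readable(estimate_metadata_ram(files)))
```

For example, 100 000 000 files yield an estimate of 50 GB, matching the table.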

Manual pages

This document does not elaborate on every command or configuration option. For comprehensive information, manual (man) files are provided in the Debian packages for both commands and configuration files.

To view a man file for a command, e.g., saunafs-admin:

- man saunafs-admin

To view a man file for a configuration, e.g., sfsmaster.cfg:

- man sfsmaster.cfg

Systemd services

The Debian packages include systemd services for initiating various SaunaFS services. This document assumes the use of these services in its examples.

On systems without systemd, or where you choose not to use it, we recommend examining the service files to build a custom setup or to find the commands to run directly.

Versioning

We use semantic versioning for SaunaFS, where the versions are described as:

MAJ.MIN.PAT

Where:

MAJ - Major version

MIN - Minor version

PAT - Patch version

We guarantee seamless upgrades and downgrades between minor and patch versions. No breaking changes will be introduced between versions of these kinds.

Downgrading across major versions is supported for one major version down.

See Migrations for how to handle upgrades and downgrades between major versions.
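The versioning rules can be expressed as a small compatibility check. This is a sketch of one reading of those rules, not an official tool: the function names are hypothetical, and interpreting "one major version down" as a major-version difference of at most one is an assumption.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int


def parse_version(text: str) -> Version:
    """Parse a MAJ.MIN.PAT string such as '4.2.0'."""
    major, minor, patch = (int(part) for part in text.split("."))
    return Version(major, minor, patch)


def is_seamless(current: str, target: str) -> bool:
    """Moves within the same major version are seamless in both directions."""
    return parse_version(current).major == parse_version(target).major


def is_supported_downgrade(current: str, target: str) -> bool:
    """Assumption: a downgrade may cross at most one major version."""
    cur, tgt = parse_version(current), parse_version(target)
    return tgt < cur and cur.major - tgt.major <= 1


print(is_seamless("4.0.1", "4.2.0"))             # → True
print(is_seamless("4.2.0", "5.0.0"))             # → False
print(is_supported_downgrade("5.0.0", "4.2.0"))  # → True
print(is_supported_downgrade("5.0.0", "3.9.0"))  # → False
```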

Breaking changes

Changes to any of the following are considered breaking changes and will result in a major version bump:

- Changes to behavior in the software as described in the man pages and docs.saunafs.com

- Command-line interface options (as described in the documentation/man pages)

- Default configuration values

- Compatibility between clients, metadata servers, and chunkservers: they must be able to communicate with each other across minor and patch versions of the same major version.

- Upgrading/downgrading from any minor version to another minor version within the same major version (for example, from 4.0.1 to 4.2.0 or from 4.2.0 to 4.0.1)

Currently non-breaking changes

The following are not currently considered breaking changes (unless they affect the behavior of the points above), but this may change in the future:

- Binary API interfaces that our clients (sfsmount and saunafs-admin) use to communicate with chunkserver/master.

Non-breaking changes

Will not be considered breaking changes in minor/patch version bumps:

- Internal protocol used by metadata servers, chunkservers and metaloggers to communicate with each other between MAJOR versions.

- Testing related code

- Output logs

- Output of command line executables.

- Improvements to documentation